As HR teams are stretched thin across the many functions of their role, the pull to experiment with AI tools that can automate operational tasks keeps getting stronger.

The AI “hype” isn’t just something HR professionals are feeling anecdotally, either.

During an April 2024 webinar on employment law issues with AI, hosted by SixFifty (an HR compliance software and AI-supported employment law research tool), we asked attendees about AI usage within their organizations. At the time, 35% said they had no plans to use AI tools as part of their HR processes.

Just over a year later, we asked a similar question to over 600 HR professionals—and the number of teams with no plans to use AI had dropped from 35% to just 7%.

“When you look at the rapid growth of AI tools, combined with HR typically being a cost center within businesses, it’s not at all surprising that more and more orgs are coming around on adopting AI. The time savings from using generative tools are looking really attractive for HR teams, who are already spread thin having to wear dozens of different hats in their roles.”

– Kimball Parker, CEO at SixFifty

For those holding the purse strings, the idea of reducing spend on a new compliance hire or on outside counsel might seem appealing from a cost-savings perspective.

But that does not mean all HR teams should put their foot on the gas pedal with AI, especially in a sensitive, risk-laden area of HR like employment law compliance. There are a handful of things you’ll want to consider first.

Below is our guide to how HR teams can effectively use AI tools for employment law compliance work and, perhaps more importantly, how they shouldn’t use them.

A quick caveat: The risks of HR using AI for employment law compliance work

For general business uses—like improving the tone of voice of an email, summarizing the key takeaways of a meeting, generating a spreadsheet equation to help sort through employee data, or creating an image to support an employee advocacy post—generative AI doesn’t carry a lot of risk for HR teams.

This is because, in general, the tool isn’t ingesting sensitive, protected information or producing something that must be precisely accurate to keep the company clear of financial penalties.

This all changes when you start to think about employment law compliance.

Staying on top of the hundreds of law changes that happen each year at the state and federal level is challenging, especially when you have employees in multiple states with different requirements and thresholds for minimum wage, sick leave, FMLA leave, pay transparency, non-compete clauses, employee notices, and more. The more states you are in, the more complex it gets.

Compliance work can get expensive, especially when you need to retain outside counsel to review policies and refresh handbooks. There’s a reason many HR teams still rely on a lawyer to vet and review policies, though.

“I would be extremely wary of saying, ‘We’re going to stop using a lawyer or thorough HR review of our policies and just use AI instead.’ You might be introducing a cure that’s worse than whatever illness your org is currently dealing with.”

– Ryan Parker, Chief Legal Officer at SixFifty

Consider, for example, that successful pay-related claims cost companies over $40,000 per instance on average. If a tool like ChatGPT gives you inaccurate or out-of-date legal information and you act on it by creating policies that violate the law, your company is on the hook.

In short: Especially for free or cheap tools, there is simply too much risk for most HR teams to safely go all-in on replacing their human-governed compliance processes with AI—and we don’t recommend that teams do it without clear, in-depth procedures, policies, and filters in place (which we’ll discuss later on in this article).

What you shouldn’t use AI for in employment law compliance

While AI tools like ChatGPT can be incredibly useful for HR work, there are some areas where using generative AI poses more risk than reward—especially when it comes to legal compliance. Here are key scenarios where HR teams should avoid using AI (or at least use it with caution), and why.

1. Creating an employee handbook from scratch

AI-generated handbooks are often incomplete and overly generic. While ChatGPT can produce a document that looks official, it is likely to miss critical content required by law or best practice. For example, it may skip state-specific leave policies, mandated language around at-will employment, or components like the disclosures employees must receive upon hiring.

Even companies with advanced AI tools struggle to guarantee pinpoint accuracy. Techniques like retrieval-augmented generation (RAG), which has a model reference only trusted, human-vetted legal sources when it answers, can lower the rate of inaccuracies and hallucinations, but they can’t yet eliminate them altogether. It’s simply too risky to offload the creation of your handbook to generative AI.

2. Drafting legally required policies

Policies related to things like leave laws, pay transparency, arbitration, separation, discrimination, and non-competes often carry strict state and federal requirements—and associated financial penalties for non-compliance. Getting them wrong isn’t just a matter of bad optics; it can expose your company to lawsuits, audits, and significant fines.

In Minnesota, for example, employers who misclassify an employee as a contractor under federal or state law, fail to report an employee to a government agency, or require an employee to sign an agreement improperly identifying them as a contractor can be fined up to $10,000.

AI can’t always account for nuances in the law, nor can it reliably stay current as legislation evolves. Employment laws change quickly, and policies must reflect those changes. A chatbot can’t reliably perform complex legal analysis like distinguishing between enforceable and unenforceable clauses in a separation agreement, especially when those distinguishing factors have second-order effects on a company’s decision-making. For these areas, legal accuracy is non-negotiable, and a lawyer should be involved.

3. Drafting hiring or separation agreements

Agreements are legally binding documents, and state-specific laws govern what can—and cannot—be included in them. For example, some states prohibit NDAs in certain situations or make it illegal to include non-competes in most employment contracts.

Even including unenforceable language—whether or not you ever intend or attempt to enforce it—can result in penalties, as is the case with unlawful non-competes in Colorado. It’s simply not safe to rely on AI to generate agreements that may include prohibited or outdated terms. These are documents that should be reviewed or generated with legal oversight every time.

4. Researching which laws apply to your business

Using AI to research which laws you’re required to follow can be a good starting point, but it can be incredibly risky if it’s your only point of reference.

ChatGPT’s training data only goes so far, and it doesn’t always reflect recent legal developments, pending legislation, or jurisdictional nuances. It also can’t account for things like your company’s headcount, locations, industry, employee classification makeup, and other factors that determine applicability to your business.

AI can also confuse “proposed” or “scheduled” laws with “effective” ones, or provide outdated information on legislation that has since been repealed or blocked. For timely and accurate guidance, HR teams need real-time legal updates, not static or generic information that is no more reliable than what you could find from a casual Google search.

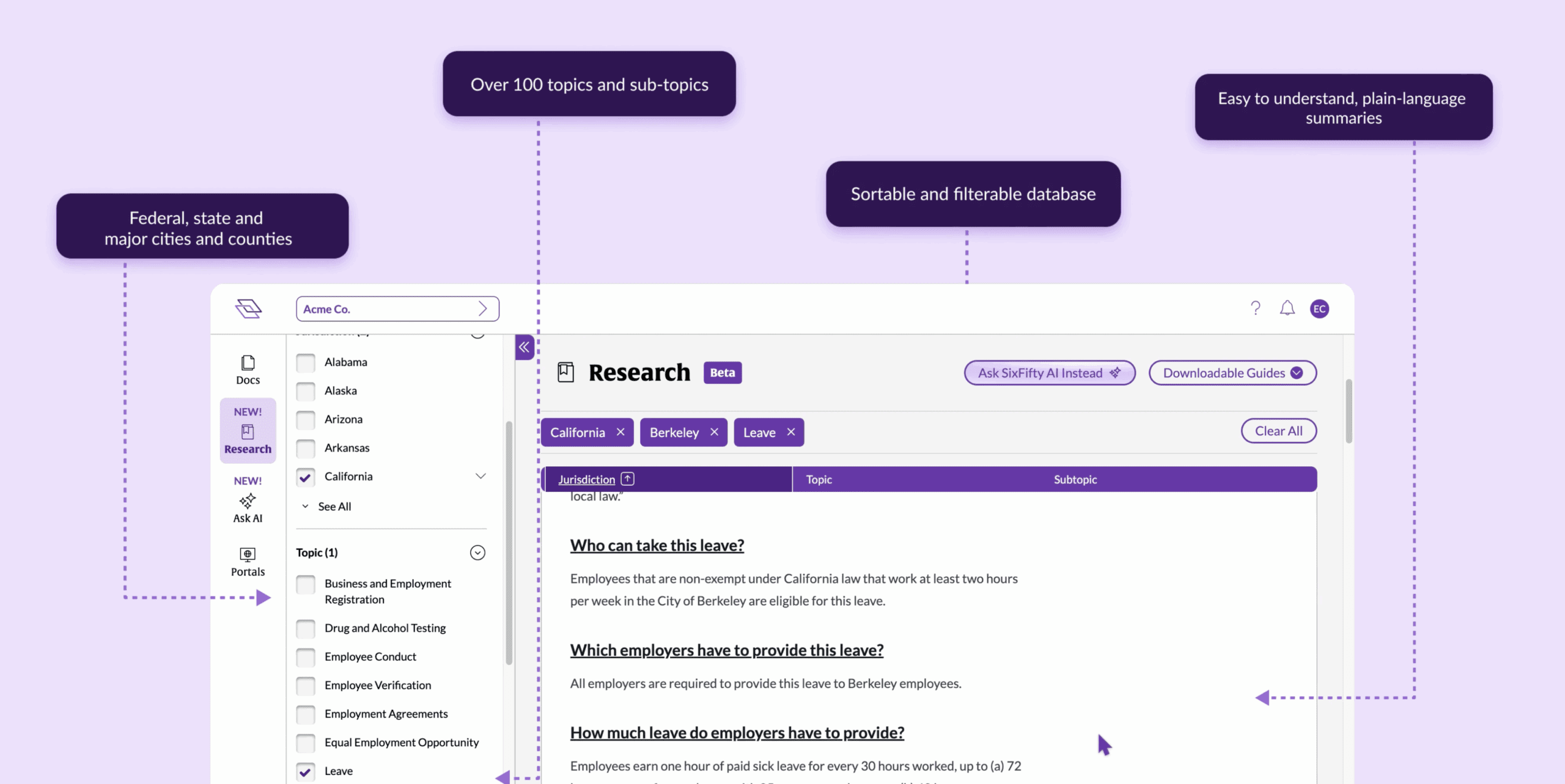

AI tools built on human-curated legal sources—like Ask SixFifty AI, which is powered by SixFifty Research, one of the largest vetted, 50-state legal research libraries available anywhere—offer safer, more reliable research experiences that open-ended tools like ChatGPT aren’t designed to provide.

5. Creating required trainings, notices, or labor posters

Regulations dictate not only what has to be communicated to employees, but also how and where. For example, labor posters must meet specific font sizes, be posted in designated areas, and include exact legal phrasing. Required trainings—such as those on harassment or discrimination—must meet certain content and duration requirements.

Using ChatGPT to create these materials risks leaving out mandatory elements or failing to meet compliance standards. For required content, it’s best to defer to trusted legal or government-issued resources, or a reliable third-party training provider.

How you can safely use AI for compliance work (with conditions)

While there are clear boundaries for where generative AI shouldn’t be used in employment law, there are also many ways it can be leveraged safely and effectively with the right guardrails. Here are some smart, lower-risk ways HR teams can use AI to lighten their compliance workload.

1. Reviewing an extremely out-of-date handbook for potential policy gaps

If you already have a professionally drafted handbook and just want to update or audit it, ChatGPT can help you identify what might be missing or outdated. For example, you might paste in your leave policy and ask if any additional types of leave should be considered.

Keep in mind: AI can point you in the right direction, but it’s not a substitute for legal research. Always verify flagged gaps using a trusted compliance resource (like SixFifty Research) or consult legal counsel before making any actual policy updates or additions.

2. Drafting non-legally-required policies

Generative AI is great for creating templates for policies that aren’t mandated by law but are set at your company’s discretion: think Pets in the Workplace policies, Social Media policies, or Dress Codes. These can serve as culture-setting documents, and ChatGPT can help you write them clearly and efficiently.

Keep in mind: Even non-required policies can accidentally create legal problems. A “Politics in the Workplace” policy, for instance, might infringe on employees’ rights under the National Labor Relations Act (NLRA) if it attempts to restrict a protected activity.

Be cautious not to include language that could be considered discriminatory or overly restrictive—and as always, our recommendation is to never publish a draft straight from a generative AI tool without human review.

3. Creating quizzes or knowledge checks to confirm trainings have been completed

AI is a handy tool for writing comprehension questions or acknowledgment language based on existing training content. If you already have a required anti-harassment training, for example, ChatGPT can help you draft a quick quiz to check retention.

Keep in mind: The AI can help you draft the questions, but it shouldn’t be used to create the training content itself—especially when that training must meet state-mandated standards.

4. Drafting internal comms to educate employees on compliance issues

Sometimes the hardest part of compliance is helping employees understand their rights and obligations—so they can use the resources, reporting channels, and leave that they have available to them. AI can help HR teams write better emails, Slack messages, or intranet updates that explain policy changes, required acknowledgments, or training deadlines in plain English.

Keep in mind: Just like any corporate communication, internal messages should still be reviewed for accuracy and clarity—especially when referencing legal obligations. But if you struggle with tone, brevity, or writing confidence, AI can give you a helpful boost.

And also remember: Garbage in, garbage out. If you’ve taken shortcuts on your handbook and policies (e.g., using AI to generate them from scratch) and inaccuracies are present, you could create even more confusion when you communicate those policies to employees.

The bottom line: Avoiding risk should be HR’s #1 concern when using AI

Letting AI do some of the heavy lifting can be a game-changer for busy HR teams—but only when it’s used thoughtfully. For anything involving legal risk, or state and federal compliance, it’s essential to supplement AI-generated content with expert review and verified sources.

Used wisely, AI can be a powerful partner (but not a replacement) for employment law expertise.